Visualizing Speech Melody to Improve Second Language Expressive Fluency in Adolescents is a research project conducted in collaboration with Rupal Patel (Speech pathology, CadLab) and funded by the Tier 1 Interdisciplinary Research Seed Grant Program, Provost Office, Northeastern University ($50,000).

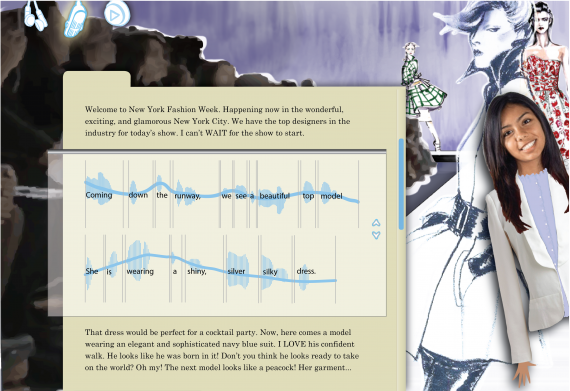

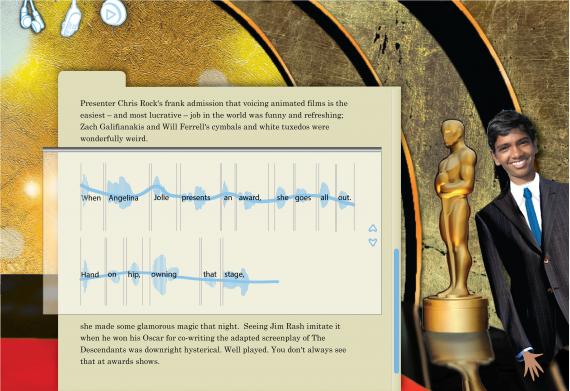

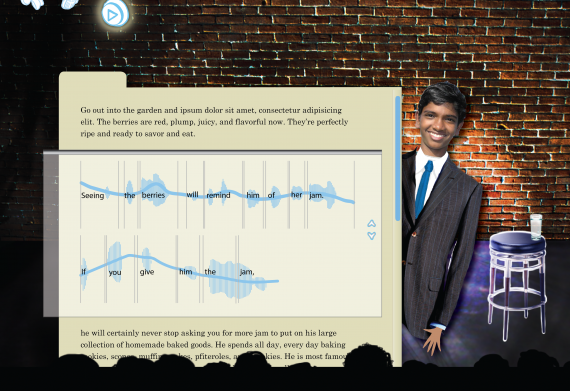

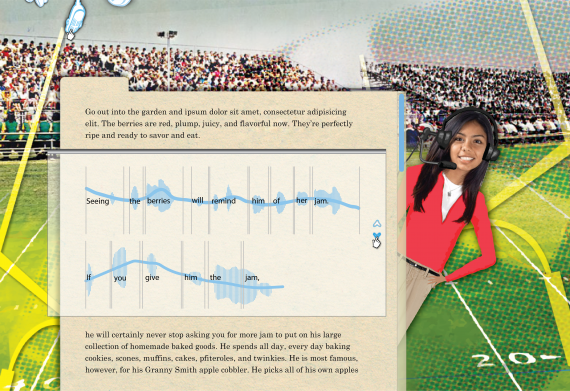

I worked on the concept and design of the interface, icons, and navigation for the prototype of an interactive tablet application. The functionality and look and feel of the interface were designed in collaboration with Laura Upham, a former student and, at the time, a graphic designer (NU ’11). The concept was a virtual television studio scenario that served as the context for reading activities. Students would supply their photos, which were then added to the character in the scenario, depending on their chosen set, as depicted in the sample images.

The final output was the iPrompt-U software, which provides auditory and visual cues about speech melody to supplement written text. The software was targeted at adolescent learners of English as a second language who struggle to read aloud with appropriate melody, which in turn impacts comprehension. Pilot tests to assess whether L2 learners can make use of visual prosodic cues to improve reading fluency were carried out within the Conversational Speech Workshops run through the NU global initiative. We noted that reading fluency improved when students received visual and auditory models, compared to the conventional method of repeating an auditory model alone, thus providing further evidence for our hypothesis.

> Story published at: www.northeastern.edu/news/2012/05/language-learning/